Ongoing Events

Performing Arts x Tech Lab Industry Sharing

Date: Friday, 5 April to Saturday, 13 April 2024

Time: Various timings

Venue: Annexe Studio, Esplanade – Theatres on the Bay, 1 Esplanade Drive, Singapore 038981

The Industry Sharing is the culmination of the nine-month Performing Arts x Tech Lab, which began in 2023. Join us to gain insights into the six project teams’ experimentation processes as they share their Lab journeys and the discoveries sparked at the intersections of performing arts and technology.

Programme booklet: go.gov.sg/booklet-paxtl-is

The programme features:

- Exhibition & Guided Tours: Learn about the motivations and experimentation process of each project team through a digitally enabled exhibition

- Demo and Sharing Sessions: Try out prototype demos, join the discussions and hear from participants about their explorations in integrating technology into their practice

- Networking Opportunities: Meet and connect with Lab participants and practitioners from the Arts x Tech community to share ideas and seed opportunities for collaboration

Learn first-hand about the projects and hear from the project teams about their motivations, learnings and journeys through the Lab, as well as how their projects tap into technologies such as artificial intelligence (AI), machine learning, neural audio synthesis, motion sensors, wearable devices and interactive projection to transform their artistic creation and practice.

For more programme and registration information, visit the Performing Arts x Tech Lab 2023/24 page on Peatix.

About the Performing Arts x Tech Lab 2023 - 2024

The Performing Arts x Tech Lab is a new partnership between the National Arts Council and Esplanade – Theatres on the Bay, with Keio-NUS CUTE Center as technology consultant. The Lab seeks to support innovation and experimentation by seeding collaboration between the arts and technology fields. It aims to encourage arts practitioners to explore the possibilities of integrating technology into their practice.

The 2023 edition of the Lab supports practitioners who work in the performing arts or with performance in general (e.g. theatre, dance, music, inter- or cross-disciplinary forms, performance practice) and who wish to work with technologists for the purposes of experimentation, prototyping or research and development. It is hoped that through the Lab, artists and technologists can collaborate to transform artistic creation, enrich artistic practice or explore new forms of expression.

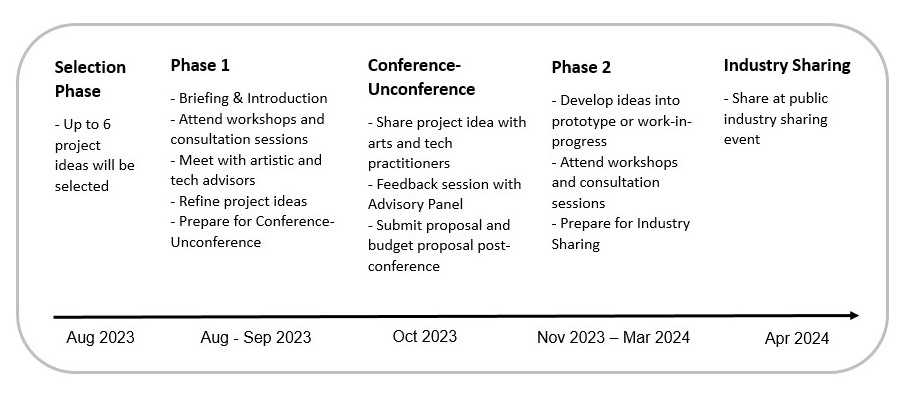

Participants will undergo a nine-month lab, starting in August 2023 and ending in April 2024. The Lab takes place across two phases and culminates in an Industry Sharing. Each phase will be shaped according to the participants’ level of readiness and needs, and customised with consideration for their proposals and ideas.

At the end of the Lab, participants will present a public sharing, which can take the form of a work-in-progress, a prototype or sharing of findings by way of a lecture, demonstration or public presentation.

This enhanced edition of the Arts x Tech Lab sees an esteemed local and international advisory panel of experts in performance, arts and technology offering guidance and consultancy to the project teams across the duration of the Lab:

- Toby Coffey, Head of Immersive Storytelling Studio, National Theatre (UK)

- Danny Yung, Founding Member and Co-Artistic Director, Zuni Icosahedron (HK)

- Clarence Ng, Production Project Manager | Akiko Takeshita, Producer of Performing Arts | Mitsuru Tokisato, Artist and Video Engineer, Yamaguchi Center for Arts and Media (JPN)

- Ho Tzu Nyen, Artist (SG)


The Lab's Offerings

- Funding Support to develop a seed idea or hypothesis

- 1-to-1 Consultations with technology consultants and an international Advisory Panel comprising leading artists, designers and institutions who work at the intersection of arts, performance and technology

- Networking opportunities with potential collaborators

- Workshops by leading experts in their fields

- Peer learning opportunities to learn from like-minded artists and technologists

Performing Arts x Tech Lab 2023 - 2024 Participants

Project title: Theories of Motion

Project lead: Alina Ling

The project explores interactive technology in the design of immersive projection environments to enhance existing performance frameworks. It uses technological instruments to explore questions of control and autonomy in performance, enabling the development of new forms of artistic expression through two parts:

- an integrated wearable system that allows performers to control multimodal effects in real time

- audio-visual & interactive design in a projection environment for immersive performance

Through the development of interactive, tangible interfaces that generate or control scenographic and audio-visual elements, the project sets out to experiment with physical, virtual and actuated expressions of the body for a designed performance:

- Physical expressions - Interactive systems that determine or restrict a performer’s movements to create choreography and influence spatial elements

- Virtual expressions - Body-tracking or mapping technologies and/or sensor-based systems to generate visual compositions with real-time data

- Actuated expressions - Interactive devices that use the performer’s gestural and relative positional data to control mechanical objects

This project seeks to find new forms of expression through the fusion of light, projection, sound, moving bodies and objects within an immersive space. Starting from the premise that immersive environments open up new possibilities for storytelling and performance, the goal is to explore interactive and projection techniques and to design a hybrid, multimodal set around this premise.

Project title: perfor.ml

Project lead: Ang Kia Yee (Feelers)

perfor.ml is a technology and performance research project led by artist collective Feelers. It seeks to integrate technology as an equal participant in performance, developing a performance methodology that allows a machine to act as an equal, live performer alongside human performers.

The project will explore the use of spatial data as input, and sonic and kinetic material as output. Spatial data will first be collected from the walking pathways of human performers in rehearsal and used to produce a heat map, which will then be used to train the machine using an averaging model. Together with its physical body, which comprises sonic and kinetic elements, the machine will be trained to take on a performing role, leveraging sensory interactions with the human performers and the performance environment. Over time, the machine performer will be able to generate its own choices that affect the performance sequence, triggering scenes by executing cues with its physical body, such as a sonic utterance or a kinetic gesture, which the human performers follow.

Being able to make live choices that significantly reshape the performance sequence gives the machine a level of influence and control over the performance that begins to level out the relationship between human and machine performers, allowing them to operate as equals in the live performance space. The experimental remixing of the story also gives the narrative a playful anarchy that we are interested in thinking more about as we conduct our research.
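To make the heat-map step in perfor.ml more concrete, here is a minimal Python sketch, assuming each rehearsal is recorded as a list of (x, y) floor positions in metres. It bins positions into a grid and averages the grids across rehearsals. The data format, function names, grid size and example values are all hypothetical illustrations, not Feelers’ actual implementation or model.

```python
from typing import List, Tuple

# Hypothetical data format: one rehearsal = a list of (x, y) floor positions in metres.
Point = Tuple[float, float]
Grid = List[List[float]]


def path_heat_map(path: List[Point], width: float, depth: float,
                  cols: int, rows: int) -> Grid:
    """Count how many position samples from one walking path fall in each grid cell."""
    grid = [[0.0] * cols for _ in range(rows)]
    for x, y in path:
        # Map the position to a cell index, clamping so edge points stay on the grid.
        c = min(max(int(x / width * cols), 0), cols - 1)
        r = min(max(int(y / depth * rows), 0), rows - 1)
        grid[r][c] += 1.0
    return grid


def average_heat_maps(maps: List[Grid]) -> Grid:
    """Average several same-sized heat maps cell by cell (a simple averaging model)."""
    rows, cols = len(maps[0]), len(maps[0][0])
    return [[sum(m[r][c] for m in maps) / len(maps) for c in range(cols)]
            for r in range(rows)]


def most_visited_cell(grid: Grid) -> Tuple[int, int]:
    """Return the (row, col) of the cell with the highest average count."""
    cells = [(r, c) for r in range(len(grid)) for c in range(len(grid[0]))]
    return max(cells, key=lambda rc: grid[rc[0]][rc[1]])


# Example: two short rehearsals on a 2 m x 2 m floor split into a 2 x 2 grid.
rehearsals = [
    [(0.5, 0.5), (1.5, 0.5), (1.5, 1.5)],
    [(1.5, 0.5), (1.5, 1.5), (1.5, 1.5)],
]
maps = [path_heat_map(p, width=2.0, depth=2.0, cols=2, rows=2) for p in rehearsals]
average = average_heat_maps(maps)
print(average)                     # [[0.5, 1.0], [0.0, 1.5]]
print(most_visited_cell(average))  # (1, 1)
```

Averaging cell by cell is only one possible reading of “averaging model”; the project description does not specify how the resulting map drives the machine’s cues.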

Project title: Dancing the Algorithm

Project lead: Dapheny Chen

"Dancing the algorithm" refers to the act of embodying or expressing an algorithmic process through movement and choreography. By translating the logical or computational aspects of an algorithm, the system transforms movement scores into an ever-evolving interactive digital platform. When dancing the algorithm, dancers explore the concepts, patterns or instructions of the algorithm and manifest them through their bodies. They may interpret the algorithm's logic, structure or computational steps using movement vocabulary, spatial relationships and timing. The goal is to bring the algorithm to life through the expressive capabilities of the human body while also serving as an archival tool that expands and evolves organically over time.

Dancing the Algorithm offers a unique way to merge the worlds of dance and computation, bridging the abstract concepts of algorithms with the physicality and expressiveness of the human body. It allows for a creative exploration of movement and provides a tangible, embodied understanding of algorithmic processes. More importantly, it is not limited to ‘dance’ but brings into existence the unique ‘movement DNA’ that resides in each individual.

Project title: DOTS 2.0

Project lead: Isabella Chiam

DOTS 2.0 is a theatrical project aimed at contemporising the way young audiences engage with theatre by bringing together digital and live elements to enhance the overall audience experience. It consists of two parts:

- a live visual and non-verbal performance with dynamic movement, striking visual design and interactive projection as its main media. The performer’s movements will be tracked by sensors, and as the narrative develops, his/her movements begin to form paint strokes that are projected onto the walls

- an immersive interactive experience where the same sensors will pick up the audience’s movements and allow them to express their creativity using their whole bodies and create their own work of art

We are also considering an app that would extend the post-show experience. With this project, we are seeking to develop ways in which physical movement and visual art can interact with motion capture technology and multimedia projection. We want to explore how this interweaving of media can effectively support the themes of creative empowerment and resilience, and further improve audience engagement. By combining live performance with digital technology, we seek to create a multidisciplinary, contemporary theatrical experience for young audiences through DOTS 2.0.

Project title: Verge 2.0

Project lead: James Lye

Verge 2.0 is the sequel to Verge, a multidisciplinary project exploring the convergence of dance and interactive technologies to give dancers the agency to control and manipulate sound, lights and visuals. It focuses on improving and enhancing the existing software and hardware prototypes while fostering collaboration with dancers from different cultural backgrounds and genres. The project will culminate in a lecture-performance showcasing the research and development outcomes. It will also inform the reconfiguration of Verge 2.0 into a more portable format for future showcases and events.

Project title: The Sound of Stories

Project lead: Kamini Ramachandran

‘The Sound of Stories’ is a project that aims to revolutionise our engagement with sound as computational data and statistical phenomena by leveraging deep learning and neural audio synthesis techniques. With a focus on real-time experiences and machine compositions, this project seeks to transform the way we perceive, analyse, and interact with sound across various contexts.

The project introduces a real-time performance aspect in which local stories are transmitted in multiple languages, voiced by the same storyteller. This approach not only preserves intangible cultural heritage but also extends the reach of performances to multilingual audiences. Audiences who speak different languages can come together to experience the same story, witnessing the plot unfold uniquely for each individual through AI-generated hallucinations.

After the performances, the project utilises neural audio synthesis techniques to analyse tonalities, emotions, and other acoustic features. This machine-made interpretation informs the generation of unique compositions that embody the collective experience, creating an immersive and dynamic sonic landscape accessible to anyone online.

Through real-time performances transmitting local stories in multiple languages and the generation of unique AI-driven compositions, this project pushes the boundaries of creativity, preserves cultural narratives, and offers exciting possibilities for artists to enhance their work and engage with audiences in new and immersive ways.

If you are interested in any of the Performing Arts x Tech Lab projects, please reach out to the contacts listed below for more information.

Arts x Tech Lab 2021 - 2022

The inaugural run of the NAC Arts x Tech Lab took place in 2021. More information about the projects and key learnings from the first edition can be found in the e-publication and video series below.

Watch Arts x Tech Bytes Series

Contact Information

If you have any questions, please contact:

Althea Giron: althea_giron_from.tp@nac.gov.sg

Chrystal Ho: chrystal_ho@nac.gov.sg

Eileen Chia: eileen_chia_from.tp@nac.gov.sg